Learning Monocular Dense Depth From Events

For further details, see our GitHub repository at siyuanwu99/DSEC.

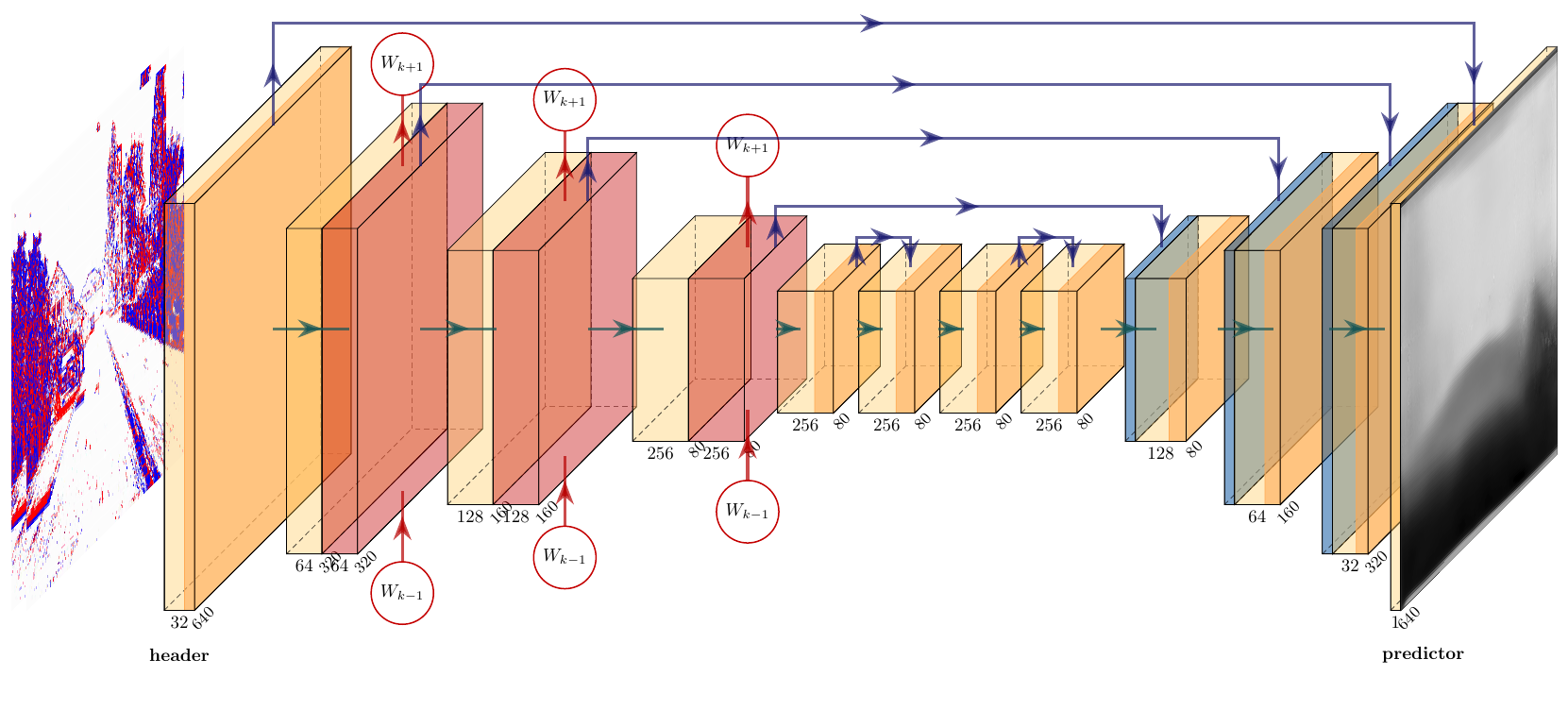

Network Structure

As shown in the figure above, our network has a recurrent, fully convolutional structure. It can be divided into a header, an encoder, residual blocks, a decoder, and a predictor.

This network structure draws on UNet, which has shown strong potential for frame-based depth estimation. The skip connections between the encoder and the decoder let features learned while contracting the image be reused for reconstruction, which benefits pixel-by-pixel prediction. Because events arrive as a continuous stream, each input voxel grid shares information with the grids that precede it, so ConvLSTM layers are used to capture temporal features.
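The ConvLSTM idea can be illustrated with a small NumPy sketch. This is not the repository's implementation: the helper names (`conv2d_same`, `ConvLSTMCell`), the random placeholder weights, and the gate ordering are assumptions, and the cell follows the standard ConvLSTM formulation without peephole connections. The usage loop shows how the hidden and cell states are carried from one event volume to the next.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_same(x, w, b):
    """Zero-padded 'same' 2D convolution (cross-correlation, as in deep-learning libraries).

    x: (C_in, H, W), w: (C_out, C_in, k, k) with odd k, b: (C_out,).
    """
    p = w.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    windows = sliding_window_view(xp, w.shape[-2:], axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", windows, w) + b[:, None, None]


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class ConvLSTMCell:
    """LSTM cell whose four gates are computed by one convolution over [input, hidden]."""

    def __init__(self, in_ch, hidden_ch, kernel_size=3, seed=0):
        rng = np.random.default_rng(seed)
        self.hidden_ch = hidden_ch
        # Placeholder random weights; a trained model would learn these.
        self.w = rng.normal(0.0, 0.1, (4 * hidden_ch, in_ch + hidden_ch, kernel_size, kernel_size))
        self.b = np.zeros(4 * hidden_ch)

    def __call__(self, x, state=None):
        _, height, width = x.shape
        if state is None:  # first event volume: start from zero states
            h = np.zeros((self.hidden_ch, height, width))
            c = np.zeros_like(h)
        else:
            h, c = state
        gates = conv2d_same(np.concatenate([x, h], axis=0), self.w, self.b)
        i, f, o, g = np.split(gates, 4, axis=0)  # input, forget, output, candidate
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        return h, (h, c)


if __name__ == "__main__":
    cell = ConvLSTMCell(in_ch=15, hidden_ch=8)
    rng = np.random.default_rng(1)
    state = None
    for _ in range(3):  # a short sequence of consecutive event volumes
        volume = rng.normal(size=(15, 48, 64))  # small stand-in for a 15-bin event volume
        features, state = cell(volume, state)  # state is reused for the next volume
    print(features.shape)  # (8, 48, 64)
```

Computing all four gates with a single convolution over the concatenated input and hidden state is the usual way to keep the cell compact; it is equivalent to separate convolutions per gate.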

The header of this network is a 2D convolutional layer with kernel size 5, followed by Batch Normalization and a ReLU activation. It takes a 15x640x480 event volume as input.

The encoder consists of three similar layers that differ in channel size. Each layer has a 2D convolutional layer with kernel size 5 and a ConvLSTM layer with kernel size 3. A ConvLSTM is an LSTM whose gates are computed by convolutions instead of fully connected layers.

The encoder is followed by two cascaded residual blocks with kernel size 3. Each residual block contains two convolutional layers with Batch Normalization and ReLU activation, and the block's input is added to its output through a skip connection.

The decoder has three similar layers that differ in output channel size. Each layer consists of an upsampling convolution and a regular convolution with kernel size 5.

Finally, a predictor, which is a convolution with kernel size 1, outputs the depth map. All skip connections in the network are merged by summation, and the ConvLSTM states are carried over to the next event volume.
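A residual block of the kind described above can be sketched in NumPy: two 3x3 convolutions, each followed by Batch Normalization, a ReLU, and a summed skip connection. Several details are assumptions rather than facts from the text: Batch Normalization runs in inference mode with stored statistics, the last ReLU is applied after the summation (as in a standard ResNet basic block), and the helper names and placeholder weights are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_same(x, w):
    """Bias-free zero-padded 'same' convolution. x: (C_in, H, W), w: (C_out, C_in, k, k)."""
    p = w.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    windows = sliding_window_view(xp, w.shape[-2:], axis=(1, 2))
    return np.einsum("chwij,ocij->ohw", windows, w)


def batch_norm(x, mean, var, gamma, beta, eps=1e-5):
    """Inference-mode Batch Normalization with stored per-channel statistics."""
    shape = (-1, 1, 1)  # broadcast per-channel parameters over (H, W)
    x_hat = (x - mean.reshape(shape)) / np.sqrt(var.reshape(shape) + eps)
    return gamma.reshape(shape) * x_hat + beta.reshape(shape)


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, bn1, w2, bn2):
    """conv-BN-ReLU, conv-BN, add the block input (summation skip), then ReLU.

    bn1 and bn2 are (mean, var, gamma, beta) tuples.
    """
    out = relu(batch_norm(conv2d_same(x, w1), *bn1))
    out = batch_norm(conv2d_same(out, w2), *bn2)
    return relu(out + x)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    channels = 4
    x = rng.normal(size=(channels, 12, 16))
    identity_bn = (np.zeros(channels), np.ones(channels), np.ones(channels), np.zeros(channels))
    w = rng.normal(0.0, 0.1, (channels, channels, 3, 3))  # placeholder 3x3 kernels
    # Two cascaded residual blocks, as after the encoder; the shape is preserved.
    y = residual_block(residual_block(x, w, identity_bn, w, identity_bn), w, identity_bn, w, identity_bn)
    print(y.shape)  # (4, 12, 16)
```

Because the skip connection sums the input with the block output, the channel count and spatial size must stay the same inside the block, which is why both convolutions keep the feature-map shape.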

Results

We trained our model on a Tesla T4 GPU (approximately 15 GB of memory) on Google Colab. Due to limited time and resources, we trained for only 50 epochs.

For all metrics, lower values are better.

| Model | Total mean absolute error (MAE) | Total mean squared error (MSE) |
|---|---|---|
| Our model (50 epochs) | 16.84 | 380.07 |

| Metric | Paper model[^7] (event-based) | Our model (event-based) | MonoDepth[^9] (frame-based) |
|---|---|---|---|
| MAE within 10 m | 1.85 | 5.32 | 3.44 |
| MAE within 20 m | 2.64 | 8.94 | 7.02 |
| MAE within 30 m | 3.13 | 12.04 | 10.03 |
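The metrics in these tables can be computed roughly as follows. This NumPy sketch assumes the usual reading of cutoff metrics in event-based depth work, where "MAE within d m" averages the absolute error only over pixels whose ground-truth depth is at most d meters. It also assumes depths in meters and invalid ground-truth pixels stored as NaN or non-positive values; the function name `depth_metrics` is hypothetical.

```python
import numpy as np


def depth_metrics(pred, gt, cutoffs=(10.0, 20.0, 30.0)):
    """Total MAE/MSE and cutoff MAE over pixels with valid ground truth.

    Assumed conventions: depths in meters, invalid ground truth stored as NaN or <= 0,
    and "MAE within d m" = mean |pred - gt| over valid pixels with gt <= d.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    valid = np.isfinite(gt) & (np.nan_to_num(gt, nan=0.0) > 0)
    gt_valid = gt[valid]
    err = np.abs(pred[valid] - gt_valid)
    metrics = {"MAE": err.mean(), "MSE": np.mean(err ** 2)}
    for d in cutoffs:
        within = gt_valid <= d
        metrics[f"MAE@{d:g}m"] = err[within].mean() if within.any() else float("nan")
    return metrics


if __name__ == "__main__":
    gt = np.array([[5.0, 15.0], [25.0, np.nan]])  # NaN marks a pixel without ground truth
    pred = np.array([[6.0, 13.0], [28.0, 10.0]])
    print(depth_metrics(pred, gt))
```

In this example the three valid pixels have errors of 1, 2 and 3 m, so the MAE is 2.0, the MSE is 14/3, and the cutoff MAEs are 1.0, 1.5 and 2.0 for 10, 20 and 30 m.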

The paper's model was trained for 300 epochs (127,800 iterations) on a real-world dataset of 8,523 samples. Due to limited computational resources, we trained for only 50 epochs on a much smaller dataset of 225 samples. Each sample covers a 50 ms time window containing 5,000 to 500,000 events. Although our model produces reasonable results, it still underperforms both baseline models.
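The text does not say how the events of a 50 ms window are turned into the 15-channel input volume. A common choice, assumed here and not confirmed by the source, is a voxel grid in which each event's polarity is split between the two nearest temporal bins with linear weights. The function name, the microsecond timestamps, and the 0/1 polarity encoding in the usage example are assumptions; note that NumPy stores the volume as (bins, height, width), i.e. (15, 480, 640).

```python
import numpy as np


def events_to_voxel_grid(x, y, t, p, num_bins=15, height=480, width=640):
    """Accumulate events into a (num_bins, height, width) voxel grid.

    Each event contributes +1 or -1 (from its polarity), split between the two
    nearest temporal bins with linear interpolation weights.
    """
    grid = np.zeros((num_bins, height, width))
    x = np.asarray(x, dtype=np.int64)
    y = np.asarray(y, dtype=np.int64)
    t = np.asarray(t, dtype=np.float64)
    pol = np.where(np.asarray(p) > 0, 1.0, -1.0)  # accepts 0/1 or -1/+1 polarities
    if t.size == 0:
        return grid
    # Map the window's timestamps onto [0, num_bins - 1].
    span = max(t.max() - t.min(), 1e-9)
    tn = (t - t.min()) / span * (num_bins - 1)
    left = np.floor(tn).astype(np.int64)
    frac = tn - left
    right = np.minimum(left + 1, num_bins - 1)
    # np.add.at accumulates correctly when several events hit the same voxel.
    np.add.at(grid, (left, y, x), pol * (1.0 - frac))
    np.add.at(grid, (right, y, x), pol * frac)
    return grid


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 5000  # lower end of the 5,000 to 500,000 events per sample
    xs = rng.integers(0, 640, n)
    ys = rng.integers(0, 480, n)
    ts = np.sort(rng.uniform(0.0, 50_000.0, n))  # microseconds within a 50 ms window
    ps = rng.integers(0, 2, n)
    volume = events_to_voxel_grid(xs, ys, ts, ps)
    print(volume.shape)  # (15, 480, 640)
```

Splitting each event across two bins keeps some sub-bin timing information, and the grid's total sum still equals the net polarity of the window.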

Results with SSIM Loss

We retrained the model with an additional SSIM loss term. For comparison, we overlay the original training metric curves (without SSIM loss). The metric plots for the first 7 epochs are shown below. The model with SSIM loss (dark blue) performs better than the original model (light blue), whose mean absolute error decreases more slowly at every stage. With the SSIM loss term, the metric errors already drop to low values after only 7 epochs.
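An SSIM loss term can be sketched in NumPy as below. The exact SSIM variant and weighting used in the project are not stated, so this follows one common choice: 3x3 local statistics with reflect padding, the constants C1 = 0.01² and C2 = 0.03² for inputs scaled to about [0, 1], and a per-pixel loss of (1 - SSIM) / 2. The L1 base loss and `ssim_weight` are hypothetical stand-ins, not the project's actual loss.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def mean_filter3(img):
    """3x3 local mean with reflect padding; output has the same shape as img."""
    padded = np.pad(img, 1, mode="reflect")
    return sliding_window_view(padded, (3, 3)).mean(axis=(-2, -1))


def ssim_loss(pred, target, c1=0.01 ** 2, c2=0.03 ** 2):
    """Mean of (1 - SSIM) / 2 using 3x3 local statistics; inputs are 2D arrays in ~[0, 1]."""
    mu_x, mu_y = mean_filter3(pred), mean_filter3(target)
    var_x = mean_filter3(pred * pred) - mu_x ** 2
    var_y = mean_filter3(target * target) - mu_y ** 2
    cov_xy = mean_filter3(pred * target) - mu_x * mu_y
    ssim = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return float(np.clip((1 - ssim) / 2, 0.0, 1.0).mean())


def l1_loss(pred, target):
    """Stand-in base loss; the project's actual base loss is not given in this section."""
    return float(np.abs(pred - target).mean())


def total_loss(pred, target, ssim_weight=1.0):
    """Base loss plus a weighted SSIM term; ssim_weight is a hypothetical value."""
    return l1_loss(pred, target) + ssim_weight * ssim_loss(pred, target)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    depth_gt = rng.uniform(0.0, 1.0, (48, 64))  # normalized depth map
    print(ssim_loss(depth_gt, depth_gt))  # 0.0 for identical maps
    print(total_loss(depth_gt + 0.05, depth_gt))
```

Unlike a per-pixel L1 term, SSIM compares local means, variances and covariances, so it also penalizes predictions whose local structure differs from the ground truth.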

| Model | MAE | MSE | MAE within 10 m | MAE within 20 m | MAE within 30 m |
|---|---|---|---|---|---|
| Model trained with SSIM loss | 13.84 | 339.28 | 3.22 | 4.83 | 7.85 |
| Original model | 20.43 | 616.21 | 5.08 | 9.09 | 13.43 |

Due to time constraints, we did not train the model with SSIM loss for the full 50 epochs. Even so, after 7 epochs its metrics already surpass our earlier 50-epoch results (MAE 13.84 vs. 16.84, MSE 339.28 vs. 380.07). This suggests that the SSIM loss, commonly used when training on images from frame-based cameras, can also be applied to event data.