Task Planning with PDDL Inference for TIAGo Robot

Simulation demo

Real-world demo

Documentation

Navigation

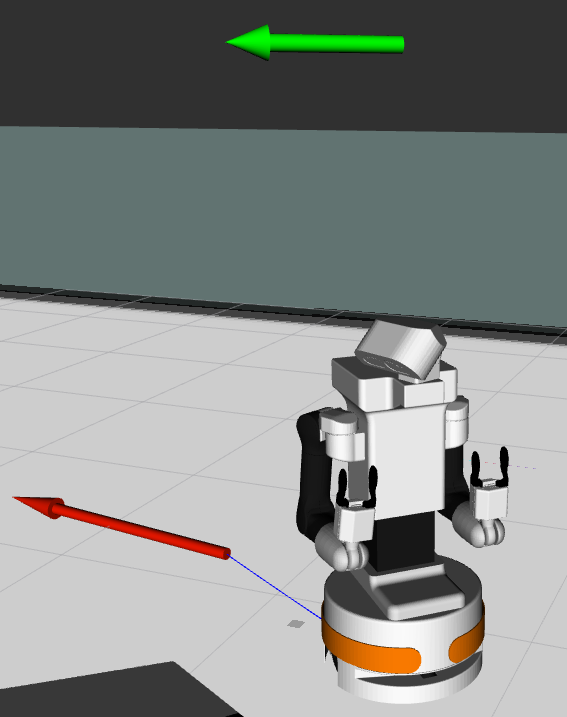

Navigation consists of driving the robot to the goal along the path found by the path planner. Most of the navigation functionality is already provided by the ROS packages for TIAGo. In this project, we added a node called "navigation_point_direction" that points the head towards the direction in which the robot is predicted to drive. This way, people around the robot can better anticipate where it will go, which makes it safer. A second advantage is that the head turns towards the new driving direction slightly in advance, so the sensors can detect obstacles earlier and TIAGo can plan more efficiently.

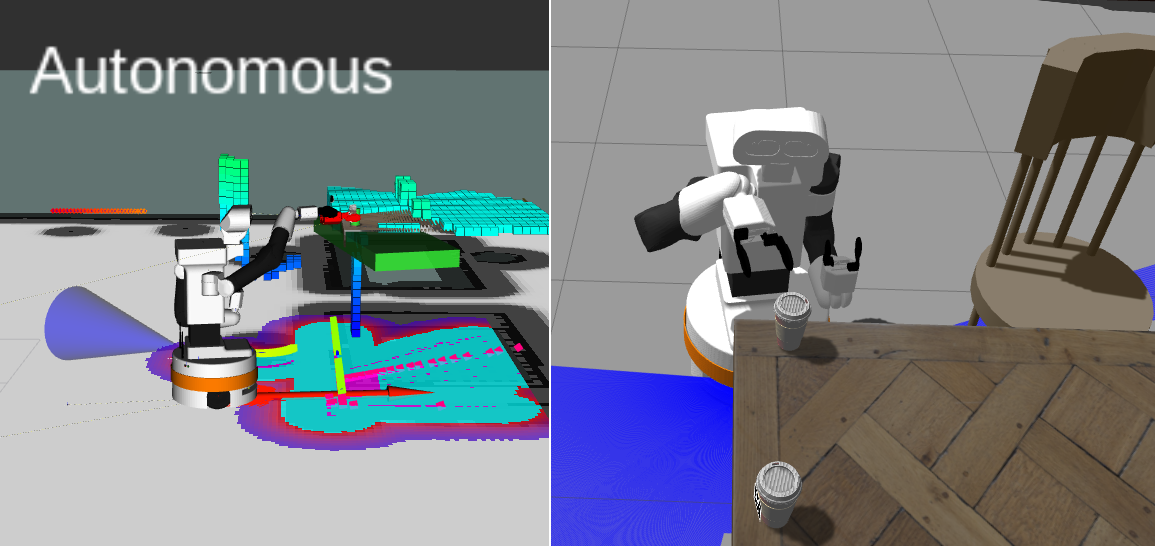

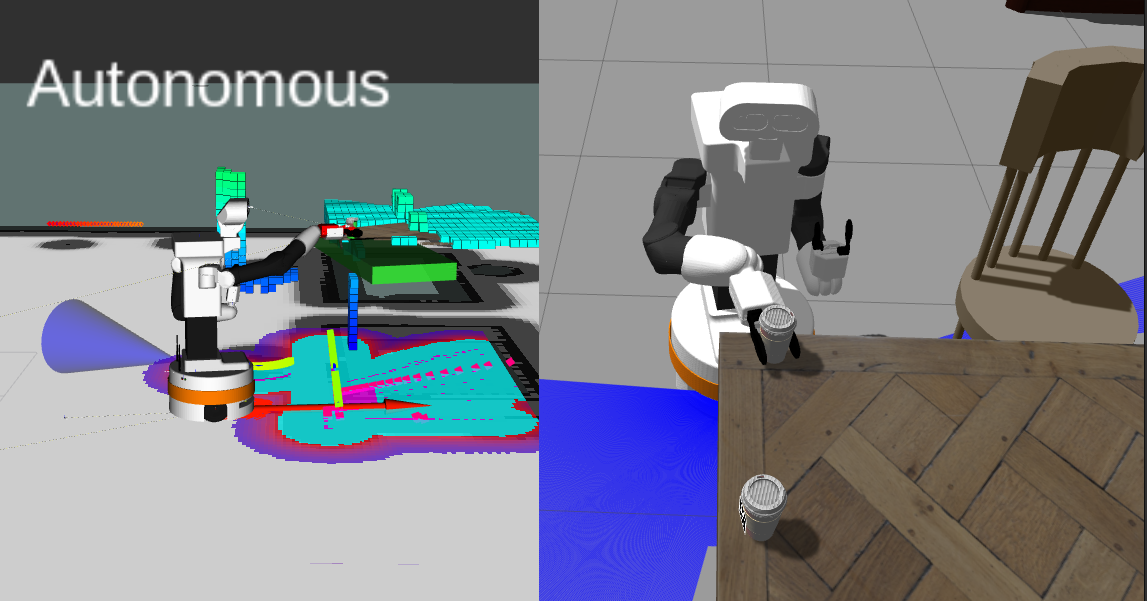

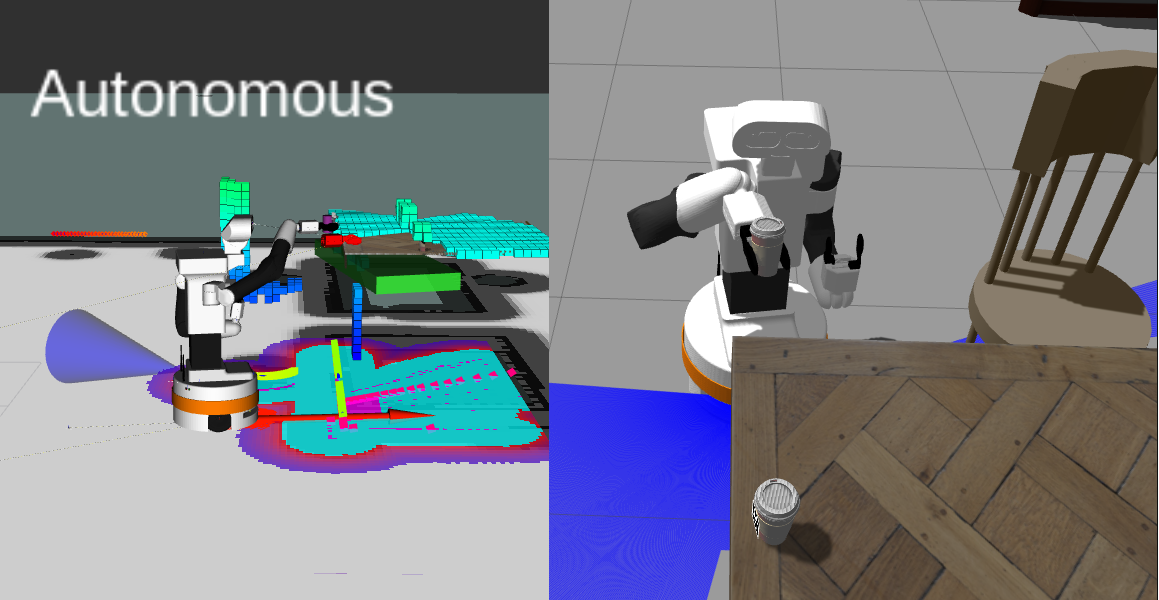

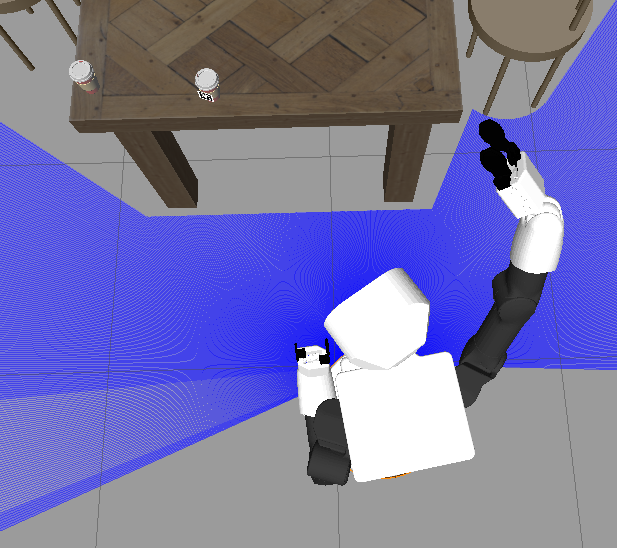

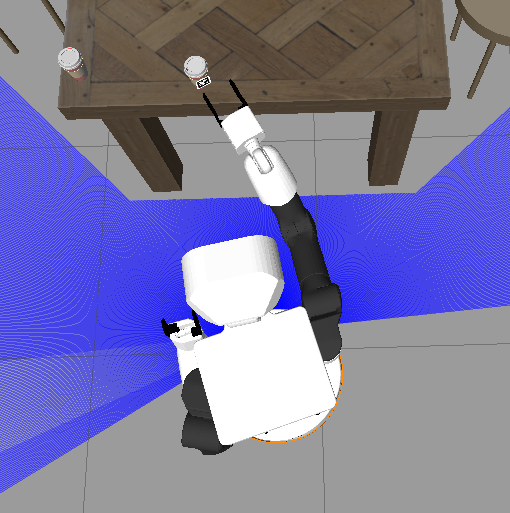

In the existing packages, a node publishes a vector of the predicted driving direction. The Point Direction node reads this vector and converts it into pitch and yaw angles for the head. When the robot makes a sharp turn left or right without moving forward, the head looks down and towards the turn, as can be seen in figure [2]. The more the robot drives straight forward, the more the head looks straight ahead. The head rotation is limited to ±70 degrees left and right and ±50 degrees up and down.

The calculation respects the sign of the angle: if the vector points left, the head always looks left, and vice versa. If the vector crosses 180 degrees (pointing backwards), the head quickly swings from the 70-degree limit on one side to the 70-degree limit on the other. In the current ROS program this never happens, because the robot only rotates and drives forward, never backwards.

The head orientation is published to the head_controller topic, which moves the head in the correct direction. The head controller action server is not used, because this motion is not critical or time-sensitive for other TIAGo functionalities. In simulation, the head moves correctly while the robot drives and rotates. When the prediction vector is also plotted in RViz (as a green arrow), the head rotations visibly follow the vector.
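The exact mapping from direction vector to head angles is not spelled out above. As a minimal sketch, assuming the vector is given as (vx, vy) in the robot base frame, that positive yaw looks left and negative pitch looks down, and using a hypothetical linear pitch mapping, the clamping and the 180-degree flip could look like this:

```python
import math

YAW_LIMIT_DEG = 70.0    # ±70° left/right, as stated in the text
PITCH_LIMIT_DEG = 50.0  # ±50° up/down, as stated in the text


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def head_angles(vx: float, vy: float) -> tuple[float, float]:
    """Map a predicted driving direction (vx, vy) in the base frame to
    (yaw, pitch) head angles in degrees.

    Assumed conventions (not taken from the original node): x points forward,
    y points left, positive yaw looks left, negative pitch looks down.
    """
    # Direction of travel in [-180, 180]; its sign decides left vs. right.
    direction = math.degrees(math.atan2(vy, vx))
    yaw = clamp(direction, -YAW_LIMIT_DEG, YAW_LIMIT_DEG)
    # Hypothetical pitch mapping: 0 when driving straight ahead, full
    # downward pitch when the vector points sideways (turning in place).
    turn_ratio = min(abs(direction) / 90.0, 1.0)
    pitch = -PITCH_LIMIT_DEG * turn_ratio
    return yaw, pitch


if __name__ == "__main__":
    print(head_angles(1.0, 0.0))     # straight ahead
    print(head_angles(0.0, 1.0))     # sharp left turn: yaw +70, pitch -50
    print(head_angles(-1.0, 1e-6))   # just left of "behind": yaw +70
    print(head_angles(-1.0, -1e-6))  # just right of "behind": yaw -70
```

The last two calls reproduce the jump described above: a vector just left of straight back yields +70°, one just right of it yields -70°.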

Path Planning

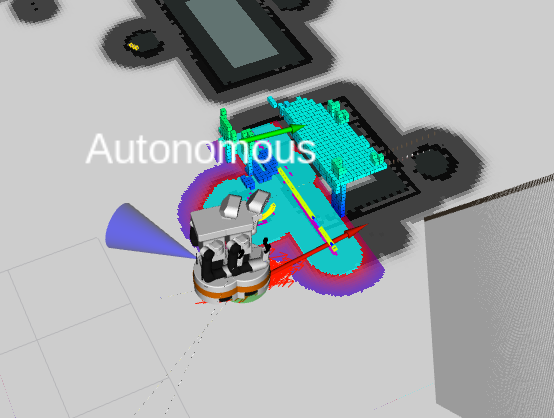

Path planning generates a collision-free trajectory from TIAGo's current position to the goal. To avoid collisions, the robot must estimate the shape and position of obstacles and build an occupancy map for the planner. In the provided package, a static occupancy map is built from data sampled by the onboard laser and RGB-D camera. This map represents static obstacles accurately but does not handle dynamic obstacles. In our case, visitors walk around TIAGo, so dynamic obstacle avoidance is essential. We implemented a simple obstacle extractor and tracker to estimate the position and velocity of these obstacles. First, the laser scan is converted into a point cloud. Then, the points are clustered, and line segments and circles are extracted from the clusters. Since line segments are caused only by walls or furniture, we discard them and keep only the circles, whose radii are also estimated in this step. Finally, an Extended Kalman Filter (EKF) tracks these clusters over time to estimate their position and velocity more accurately. The resulting observations are published to the /obstacle_observer/obstacles topic, whose message type is defined in the obstacle_observer package.
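The tracking step above uses an EKF. For the common constant-velocity motion model with position-only measurements, both the motion and the measurement models are linear, so the EKF reduces to an ordinary Kalman filter. The sketch below tracks one coordinate of an obstacle center (one filter per axis); it is not the project's filter, and the noise values q and r are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AxisTracker:
    """Constant-velocity Kalman filter for one axis, state = (position, velocity)."""
    p: float = 0.0      # position estimate [m]
    v: float = 0.0      # velocity estimate [m/s]
    P00: float = 1.0    # covariance entries of the state (p, v)
    P01: float = 0.0
    P10: float = 0.0
    P11: float = 100.0  # large: the initial velocity is unknown
    q: float = 0.1      # hypothetical acceleration noise intensity
    r: float = 0.01     # hypothetical measurement noise variance [m^2]

    def predict(self, dt: float) -> None:
        # State prediction x' = F x with F = [[1, dt], [0, 1]]
        self.p += self.v * dt
        # Covariance prediction P' = F P F^T + Q (white-noise acceleration Q)
        P00 = self.P00 + dt * (self.P01 + self.P10) + dt * dt * self.P11
        P01 = self.P01 + dt * self.P11
        P10 = self.P10 + dt * self.P11
        P11 = self.P11
        self.P00 = P00 + self.q * dt ** 3 / 3.0
        self.P01 = P01 + self.q * dt ** 2 / 2.0
        self.P10 = P10 + self.q * dt ** 2 / 2.0
        self.P11 = P11 + self.q * dt

    def update(self, z: float) -> None:
        # Measurement model z = H x + noise with H = [1, 0]
        s = self.P00 + self.r                # innovation variance
        k0, k1 = self.P00 / s, self.P10 / s  # Kalman gain
        innovation = z - self.p
        self.p += k0 * innovation
        self.v += k1 * innovation
        # Covariance update P = (I - K H) P
        P00, P01, P10, P11 = self.P00, self.P01, self.P10, self.P11
        self.P00 = (1.0 - k0) * P00
        self.P01 = (1.0 - k0) * P01
        self.P10 = P10 - k1 * P00
        self.P11 = P11 - k1 * P01


if __name__ == "__main__":
    tracker = AxisTracker()
    dt = 0.1
    for step in range(1, 101):
        tracker.predict(dt)
        tracker.update(step * dt)  # noise-free obstacle moving at 1 m/s
    print(f"position {tracker.p:.3f} m, velocity {tracker.v:.3f} m/s")
```

The velocity estimate is what makes the observation useful for a local planner: it lets the planner anticipate where a visitor will be rather than only where they are.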

We use the default A* planner for global planning and the open-source teb_local_planner package for local planning. The global planner finds a discrete path that avoids walls and tables. The local planner refines this path, taking into account the nonholonomic constraints of the robot base, and avoids dynamic obstacles. The teb_local_planner package provides these functions [1], but its obstacle input must be of type ObstacleArrayMsg from the costmap_converter package. We therefore implemented dynamic_obs_node as a bridge between the two packages: it subscribes to /obstacle_observer/obstacles and publishes to /move_base/TebLocalPlannerROS/obstacles.
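The core of dynamic_obs_node is a per-message conversion. The sketch below shows that conversion with plain Python dataclasses standing in for the ROS messages, so it runs without ROS. The input fields (x, y, radius, vx, vy) are a hypothetical layout of the obstacle_observer message; the output mirrors how a circular obstacle is commonly described to teb_local_planner through costmap_converter's ObstacleMsg (a single-point polygon, a radius, and a velocity). The frame name "map" is also an assumption.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class TrackedObstacle:
    """Hypothetical stand-in for one obstacle from /obstacle_observer/obstacles."""
    x: float
    y: float
    radius: float
    vx: float
    vy: float


@dataclass
class TebObstacle:
    """Stand-in mirroring the ObstacleMsg fields filled in here."""
    id: int
    polygon: List[Tuple[float, float, float]]  # one point = point/circular obstacle
    radius: float
    velocity: Tuple[float, float]              # linear x/y of velocities.twist


@dataclass
class TebObstacleArray:
    frame_id: str
    obstacles: List[TebObstacle] = field(default_factory=list)


def to_teb_obstacles(tracked: List[TrackedObstacle],
                     frame_id: str = "map") -> TebObstacleArray:
    """Convert tracked circles into the structure the local planner expects."""
    out = TebObstacleArray(frame_id=frame_id)
    for i, obs in enumerate(tracked):
        out.obstacles.append(
            TebObstacle(
                id=i,
                polygon=[(obs.x, obs.y, 0.0)],
                radius=obs.radius,
                velocity=(obs.vx, obs.vy),
            )
        )
    return out
```

In a real node this function body would run inside the subscriber callback and fill the actual message types before publishing; keeping the conversion stateless leaves all tracking state in the observer node.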

Although the approach seemed promising, after implementation we found that it was not robust. In simulated tests, the laser-based detection either missed the visitor or mistook a chair for a visitor, regardless of the parameters we tried. The resulting success rate (below 30%) was too low for the final system, so in the end we used the standard move_base setup and considered only static obstacles.

Picking Sequence

The main purpose of the picking sequence is to execute several robot actions as a whole: searching for cups, picking them up, moving to the recycling bin, and placing the cups inside the bin. All cup-related robot behaviors are encapsulated in this ROS node, and each behavior is implemented as a ROS action server. The /sequence_node runs the ROS action client for each of these standalone functions. It is launched when the user presses the "searching cups" button in the GUI. Once started, it first runs a search client to find the nearest coffee cup via the AprilTag marker on the cup. Next, it runs a move client to approach the detected cup and prepare for picking. Then, a pick client is called to prepare the picking action and pick up the cup. If the cup is picked successfully, another move client drives the robot to the recycling bin. Finally, a place client places the cup inside the bin. If the sequence is interrupted by an error, e.g. TIAGo fails to pick the cup or no cups are found, /sequence_node terminates with an error code.
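The control flow of /sequence_node can be summarized as a chain of steps that stops at the first failure. The sketch below models each action client as a plain Python callable so it runs without ROS; the step names and the error-code scheme (index of the failing step) are hypothetical. In the real node, each callable would send a goal through an action client and wait for its result.

```python
from typing import Callable, List, Tuple

SUCCESS = 0  # hypothetical: 0 means the whole sequence succeeded

Step = Tuple[str, Callable[[], bool]]


def run_sequence(steps: List[Step]) -> Tuple[int, str]:
    """Run the steps in order and stop at the first failure.

    Returns (SUCCESS, "done") if every step succeeded, otherwise the
    1-based index of the failing step together with its name.
    """
    for index, (name, action) in enumerate(steps, start=1):
        if not action():
            return index, name
    return SUCCESS, "done"


if __name__ == "__main__":
    calls = []

    def make_step(name: str, ok: bool) -> Step:
        def action() -> bool:
            calls.append(name)
            return ok
        return name, action

    sequence = [
        make_step("search", True),
        make_step("move_to_cup", True),
        make_step("pick", False),  # simulate a failed pick
        make_step("move_to_bin", True),
        make_step("place", True),
    ]
    print(run_sequence(sequence))  # (3, 'pick')
    print(calls)                   # ['search', 'move_to_cup', 'pick']
```

Stopping at the first failure matches the behavior described above: after a failed pick, neither the move to the bin nor the place step is executed.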

Search server

The Search Server is a ROS action server we built from scratch for searching coffee cups. It uses a custom action definition, Search.action, for communication between server and client. Like other action definitions, it consists of a goal, a result, and feedback. The goal is an integer indicating the number of objects to search for. The result contains a boolean indicating success or failure and an integer holding the ID of the detected AprilTag marker. The server subscribes to /tag_detections, provided by the existing apriltag_ros package, and waits for goals from its action client. When a goal arrives, the server executes three stages. First, TIAGo scans the entire area by turning its head and looking around; meanwhile, it analyzes the detection results from /tag_detections and saves the position and orientation of one selected AprilTag marker. Then, the robot applies a homogeneous transformation to compute a collision-free waypoint in front of the marker in the map frame; this waypoint is 0.78 meters in front of the coffee cup to be picked. Finally, TIAGo sends this waypoint to move_base and drives to it.
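The waypoint computation can be sketched in 2D with a homogeneous transformation. The sketch assumes the marker pose has already been transformed into the map frame and reduced to (x, y, yaw), where yaw is taken to point from the marker face towards the side the robot should approach from; this axis convention is an assumption, since the orientation of the AprilTag frame relative to the cup is not specified above. The 0.78 m offset is the value from the text, and the collision check itself is not shown.

```python
import math

APPROACH_DISTANCE = 0.78  # meters in front of the cup, as stated in the text


def pose_to_matrix(x, y, yaw):
    """3x3 homogeneous transform of a 2D pose (x, y, yaw) in the map frame."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, x],
            [s, c, y],
            [0.0, 0.0, 1.0]]


def transform_point(T, px, py):
    """Apply a 3x3 homogeneous transform to the point (px, py)."""
    hx = T[0][0] * px + T[0][1] * py + T[0][2]
    hy = T[1][0] * px + T[1][1] * py + T[1][2]
    return hx, hy


def approach_waypoint(marker_x, marker_y, marker_yaw, distance=APPROACH_DISTANCE):
    """Return (x, y, yaw) of a waypoint `distance` m in front of the marker,
    with the robot heading back towards the marker."""
    T_map_marker = pose_to_matrix(marker_x, marker_y, marker_yaw)
    # Point `distance` m along the marker's assumed facing axis (local x).
    wx, wy = transform_point(T_map_marker, distance, 0.0)
    heading = marker_yaw + math.pi
    heading = math.atan2(math.sin(heading), math.cos(heading))  # wrap to [-pi, pi]
    return wx, wy, heading


if __name__ == "__main__":
    # Marker at (2.0, 1.0) facing the -x direction of the map.
    print(approach_waypoint(2.0, 1.0, math.pi))
```

The resulting (x, y, yaw) would then be packed into a move_base goal in the map frame.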

Pick server

The Pick Server is a ROS action server for path planning during cup picking. It uses moveit2, an open-source project for robot arm motion planning. Like the Search Server, it subscribes to the /tag_detections topic. It also uses several ROS services, e.g. add_collision_object and remove_collision_object, to build and clear the occupancy map. This map helps find a collision-free trajectory for TIAGo's gripper to grasp the cup. Another service it calls is get_grasp_pose, which provides the accurate grasp position for a coffee cup.

The pick server receives requests from the pick client in /sequence_node. Each request contains the ID of the coffee cup detected by the search server. The picking process can be divided into eight states:

- Look around. TIAGo's head iterates through 7 preset poses, covering every area it can see, to find and locate the requested AprilTag marker.
- Add collision objects. TIAGo uses its RGB-D camera to build an occupancy map that includes the cups and the table.
- Compute grasp poses. Based on the position and orientation of the AprilTag marker in the camera frame, TIAGo computes the gripper's grasp poses and transforms them into the base frame. The grasp pose is the gripper pose used to pick the object; the pre-grasp and post-grasp poses should be easy to reach safely.
- Move to pre-grasp pose. TIAGo first raises its right arm, then slowly moves the gripper to the pre-grasp position.
- Move to grasp pose. TIAGo extends its gripper to the cup.
- Close the gripper and attach the cup.
- Move to post-grasp pose.
- Remove collision objects. The occupancy map is cleared so that the robot can move to other positions.
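The "compute grasp poses" state can be sketched as a frame transform followed by fixed offsets. In the sketch below, the camera-to-base transform is given as a 4x4 homogeneous matrix (in the real system it would come from tf), the grasp position is assumed to equal the marker position, and the 0.10 m pre-grasp back-off and 0.10 m post-grasp lift are hypothetical values, not the project's parameters. Gripper orientations are omitted for brevity.

```python
import math

PRE_GRASP_BACKOFF = 0.10  # hypothetical: stop 10 cm before the cup
POST_GRASP_LIFT = 0.10    # hypothetical: lift the cup by 10 cm


def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T (list of rows) to a 3D point p."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


def grasp_poses(T_base_camera, marker_in_camera, approach_yaw):
    """Return (pre_grasp, grasp, post_grasp) positions in the base frame.

    approach_yaw: assumed horizontal direction (in the base frame) along
    which the gripper approaches the cup.
    """
    grasp = transform_point(T_base_camera, marker_in_camera)
    dx, dy = math.cos(approach_yaw), math.sin(approach_yaw)
    # Pre-grasp: back off along the approach direction.
    pre_grasp = (grasp[0] - PRE_GRASP_BACKOFF * dx,
                 grasp[1] - PRE_GRASP_BACKOFF * dy,
                 grasp[2])
    # Post-grasp: lift straight up after closing the gripper.
    post_grasp = (grasp[0], grasp[1], grasp[2] + POST_GRASP_LIFT)
    return pre_grasp, grasp, post_grasp


if __name__ == "__main__":
    # Hypothetical camera pose: 0.1 m ahead of and 1.0 m above the base,
    # with axes aligned to the base frame for simplicity.
    T = [[1.0, 0.0, 0.0, 0.1],
         [0.0, 1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0, 1.0],
         [0.0, 0.0, 0.0, 1.0]]
    for name, pose in zip(("pre-grasp", "grasp", "post-grasp"),
                          grasp_poses(T, (0.5, 0.0, -0.2), 0.0)):
        print(name, pose)
```

A real camera optical frame is rotated relative to the base (its z axis points forward), so the rotation block of T would not be the identity on the robot.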

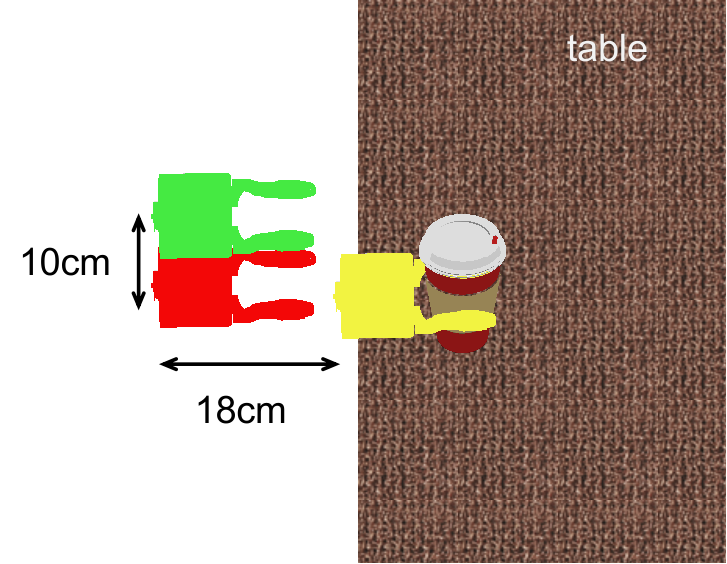

Place Server

This part contains the ROS action server for placing the coffee cup in the recycling bin. Because the bin's location is fixed, no AprilTag marker is needed to tell TIAGo where to move the gripper. Instead, a fixed gripper pose is hard-coded in /sequence_node and sent to the place server as a goal. The gripper pose is 0.60 meters in front of the robot, 0.10 meters to the right, and 0.40 meters high in the base_link frame; after tuning in simulation, we found this to be the best setting for placing cups into the bin. Like picking, placing is divided into steps, in this case three. First, TIAGo looks around and builds an occupancy map of the environment. Second, TIAGo moves its arm to the placing pose and opens the gripper to release the coffee cup. Finally, TIAGo tucks its arm and clears the occupancy map.
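The hard-coded placing goal can be written down explicitly. Following the ROS base_link convention (REP 103: x forward, y left, z up), "0.10 meters to the right" becomes a negative y value. The PlaceGoal type below is a hypothetical stand-in for the place action's goal message, the gripper orientation is omitted because it is not given above, and the three placing steps are modelled as plain callables.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class PlaceGoal:
    """Hypothetical stand-in for the place action goal (position only)."""
    frame_id: str
    x: float  # forward [m]
    y: float  # left [m]; negative means to the right
    z: float  # up [m]


# Values from the text: 0.60 m ahead, 0.10 m to the right, 0.40 m high.
PLACE_GOAL = PlaceGoal(frame_id="base_link", x=0.60, y=-0.10, z=0.40)


def run_place(steps: List[Callable[[], bool]]) -> bool:
    """Run the place steps (map, place and release, tuck and clear) in order.

    all() short-circuits, so execution stops at the first failing step.
    """
    return all(step() for step in steps)
```

Keeping the goal in one named constant makes the tuned values easy to find and change if the bin is moved.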

Performance

We tested the search-pick-place sequence in the simulation environment. To evaluate the robot under different conditions, we varied the position and orientation of the coffee cup and started the robot from different positions.

As shown in the table, our node achieves a 60% success rate on the complete search, pick, and place task. The search server succeeds in 100% of the runs, because the AprilTag markers make detection stable and accurate. The pick server succeeds in 60% of the runs, the lowest rate of the three servers. The place server succeeds in 100% of the runs in which it was executed (6 of 6): since the coffee bin is at a fixed position and there are no obstacles between the table and the bin, placing is relatively easy. In our tests, every successful pick was followed by a successful place.

| Test | Search | Pick | Place |
|---|---|---|---|
| 01 | ✅ | failed: grasp | |
| 02 | ✅ | failed: pre-grasp | |
| 03 | ✅ | ✅ | ✅ |
| 04 | ✅ | ✅ | ✅ |
| 05 | ✅ | ✅ | ✅ |
| 06 | ✅ | failed: pre-grasp | |
| 07 | ✅ | ✅ | ✅ |
| 08 | ✅ | failed: grasp | |
| 09 | ✅ | ✅ | ✅ |
| 10 | ✅ | ✅ | ✅ |

Experiments in simulation environment
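The success rates quoted in this section follow directly from the table. The snippet below recomputes them, counting each stage only over the runs in which it was actually attempted, which is why the place rate is 6/6 while the end-to-end rate is 6/10.

```python
# Results per test from the table: (search, pick, place); None = not attempted.
RESULTS = {
    "01": (True, False, None),
    "02": (True, False, None),
    "03": (True, True, True),
    "04": (True, True, True),
    "05": (True, True, True),
    "06": (True, False, None),
    "07": (True, True, True),
    "08": (True, False, None),
    "09": (True, True, True),
    "10": (True, True, True),
}


def stage_rate(stage: int) -> float:
    """Success rate of one stage over the runs in which it was attempted."""
    attempts = [r[stage] for r in RESULTS.values() if r[stage] is not None]
    return sum(attempts) / len(attempts)


def end_to_end_rate() -> float:
    """Fraction of runs in which all three stages succeeded."""
    return sum(all(r) for r in RESULTS.values()) / len(RESULTS)


if __name__ == "__main__":
    for i, name in enumerate(("search", "pick", "place")):
        print(f"{name}: {stage_rate(i):.0%}")  # search: 100%, pick: 60%, place: 100%
    print(f"end-to-end: {end_to_end_rate():.0%}")  # end-to-end: 60%
```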

As indicated in the table, the pick server is the bottleneck of this node, and its failures have several causes. In tests 01, 02, and 06, picking failed because the cup's orientation was unsuitable. Our node only uses the right gripper to pick objects, and it derives the pre-grasp and grasp poses from the orientation of the AprilTag marker. Therefore, when the marker faces the robot's left, the robot may not find a feasible trajectory to reach and pick the cup, and picking fails.

In test 08, the distance between the robot and the coffee cup was too large, so the gripper could not reach the cup even with the arm fully extended. Although the robot reached the pre-grasp pose, it could not reach the grasp pose, and picking failed.